
| title | date | tags |
|---|---|---|
| Vault Secrets Into K8s | 2024-04-21T19:51:06-07:00 | [homelab k8s vault] |

Continuing my [recent efforts]({{< ref "/posts/oidc-on-k8s" >}}) to make authentication on my homelab cluster more "joined-up" and automated, this weekend I dug into linking Vault to Kubernetes so that pods could authenticate via shared secrets without me having to manually create the secrets in Kubernetes.

As a concrete use-case - currently, in order for Drone (my CI system) to authenticate to Gitea (to be able to read repos), it needs OAuth credentials to connect. These are provided to Drone in env variables, which are themselves sourced from a secret. In an ideal world, I'd be able to configure the applications so that:

- When Gitea starts up, if there is no OAuth app configured for Drone (i.e. if this is a cold-start situation), it creates one and writes out the creds to a Vault location.

- The values from Vault are injected into the Drone namespace.

- The Drone application picks up the values and uses them to authenticate to Gitea.

I haven't taken a stab at the first part (automatically creating an OAuth app at Gitea startup and exporting to Vault), but injecting the secrets ended up being pretty easy!

Secret Injection

There are actually three different ways of providing Vault secrets to Kubernetes containers:

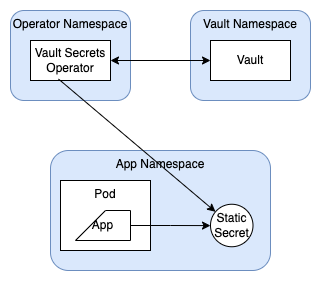

- The Vault Secrets Operator, which syncs Vault Secrets to Kubernetes Secrets.

- The Vault Agent Injector, which syncs Vault Secrets to mounted paths on containers.

- The Vault Proxy, which can act as a (runtime) proxy to Vault for k8s containers, simplifying the process of authentication[^1].

I don't think that Drone's able to load OAuth secrets from the filesystem or at runtime, so Secrets Operator it is!

The walkthrough here was very straightforward - I got through to creating and referencing a Static Secret with no problems, and then tore it down and recreated it via IaC. With that in place, it was pretty easy to (convert my Drone specification to Jsonnet and then to) create a Kubernetes Secret referencing the Vault secrets. To check that it worked, I deleted the original (manually-created) Secret, and deleted the Drone Pod immediately before creating the new one - as I expected, the Pod failed to come up at first (because the Secret couldn't be found), and then successfully started once the Secret was created. Works like a charm!
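Since the VaultAuth/VaultStaticSecret pair is exactly the kind of boilerplate that gets factored out for reuse, here is a rough Python equivalent of such a helper. It is a sketch under assumptions, not the post's Jsonnet: the function name, the role defaulting to the app name, the `default` service account, and the `kv` mount and `drone/gitea-oauth` path are all hypothetical. The field names follow my understanding of the Vault Secrets Operator's `secrets.hashicorp.com/v1beta1` CRDs, so check them against the CRD reference for the operator version you run.

```python
import json
from typing import List, Optional

API_VERSION = "secrets.hashicorp.com/v1beta1"


def vault_secret_manifests(app: str, namespace: str, kv_mount: str, kv_path: str,
                           auth_mount: str = "kubernetes", role: Optional[str] = None,
                           refresh_after: str = "30s") -> List[dict]:
    """Build a VaultAuth and a VaultStaticSecret that sync one KV-v2 path into a
    Kubernetes Secret named "<app>-secrets". Hypothetical helper, for illustration."""
    auth_name = f"{app}-vault-auth"
    vault_auth = {
        "apiVersion": API_VERSION,
        "kind": "VaultAuth",
        "metadata": {"name": auth_name, "namespace": namespace},
        "spec": {
            "method": "kubernetes",
            "mount": auth_mount,  # where the Kubernetes auth method is mounted in Vault
            # Assumption: the Vault role is named after the app unless given explicitly.
            "kubernetes": {"role": role or app, "serviceAccount": "default"},
        },
    }
    static_secret = {
        "apiVersion": API_VERSION,
        "kind": "VaultStaticSecret",
        "metadata": {"name": f"{app}-secrets", "namespace": namespace},
        "spec": {
            "type": "kv-v2",
            "mount": kv_mount,
            "path": kv_path,
            "vaultAuthRef": auth_name,  # ties the secret to the auth defined above
            "refreshAfter": refresh_after,
            # The operator creates (and keeps updating) this Kubernetes Secret.
            "destination": {"name": f"{app}-secrets", "create": True},
        },
    }
    return [vault_auth, static_secret]


if __name__ == "__main__":
    # Hypothetical mount and path for Drone's Gitea OAuth credentials.
    for doc in vault_secret_manifests("drone", "drone", "kv", "drone/gitea-oauth"):
        print(json.dumps(doc, indent=2))
```

Reusing it for another application is then just another call with a different app name and path, which is the kind of reuse that motivated pulling these definitions out into a shared definition.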

Further thoughts

Type-safety and tooling

I glossed over a few false starts and speedbumps I faced with typoing configuration values - `adddress` instead of `address`, for instance. I've been tinkering with cdk8s at work, and really enjoy the fact that it provides IntelliSense for "type-safe" configuration values, prompting for expected keys and warning when unrecognized keys are provided. Jsonnet has been a great tool for factoring out commonalities in application definitions, but I think I'm overdue for adopting cdk8s at home as well!
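The value of "warning when unrecognized keys are provided" is easy to show even without cdk8s. This is not cdk8s's API; it is a small Python analogue using a frozen dataclass as a hypothetical typed stand-in for a VaultConnection spec (only two fields shown), plus a parser that rejects unknown keys instead of silently dropping them.

```python
from dataclasses import dataclass, fields
from typing import Any, Dict


@dataclass(frozen=True)
class VaultConnectionSpec:
    """Hypothetical typed stand-in for a VaultConnection spec (only two fields shown)."""
    address: str
    skipTLSVerify: bool = False


def parse_spec(raw: Dict[str, Any]) -> VaultConnectionSpec:
    """Reject unrecognized keys up front, rather than silently ignoring a typo."""
    known = {f.name for f in fields(VaultConnectionSpec)}
    unknown = sorted(set(raw) - known)
    if unknown:
        raise ValueError(f"unrecognized keys {unknown}; expected a subset of {sorted(known)}")
    return VaultConnectionSpec(**raw)


if __name__ == "__main__":
    print(parse_spec({"address": "http://vault.vault.svc:8200"}))
    try:
        parse_spec({"adddress": "http://vault.vault.svc:8200"})
    except ValueError as err:
        print(err)  # the error names the misspelled key
```

A static checker such as mypy would also typically flag a direct `VaultConnectionSpec(adddress=...)` call before anything runs, which is closer to the editor-time feedback described above.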

Similarly, it's a little awkward that the Secret created is part of the app-of-apps application, rather than the drone application. I structured it this way (with the Vault CRDs at the top-level) so that I could extract the VaultAuth and VaultStaticSecret to a Jsonnet definition so that they could be reused in other applications. If I'd put the auth and secret definition inside the `charts/drone` specification, I'd have had to figure out how to create and publish a Helm Library to extract them. Which, sure, would be a useful skill to learn - but, one thing at a time!

Dynamic Secrets

I was partially prompted to investigate this because of a similar issue we'd faced at work - however, in that case, the authentication secrets are dynamically-generated and short-lived, and client apps will have to refetch auth tokens periodically. It looks like the Secrets Operator also supports Dynamic Secrets, whose "lifecycle is managed by Vault and [which] will be automatically rotated". This isn't quite the situation we have at work - where, instead, a fresh short-lived token is created via a Vault Plugin on every secret-read - but it's close! I'd be curious to see how the Secrets Operator can handle this use-case - particularly, whether the environment variable on the container itself will be updated when the secret is changed.
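For the "client apps will have to refetch auth tokens periodically" side of this, one common shape is a cache that remembers each token's TTL and refetches shortly before expiry. This is a minimal sketch, assuming a hypothetical `fetch` callable that returns `(token, ttl_seconds)` (in practice, a read against Vault); the 10% refresh margin and the injectable clock are my own choices, not anything the Secrets Operator provides.

```python
import time
from typing import Callable, Optional, Tuple

# Hypothetical fetcher signature: returns (token, ttl_seconds).
Fetcher = Callable[[], Tuple[str, float]]


class RefreshingToken:
    """Cache a short-lived token and refetch it shortly before it expires."""

    def __init__(self, fetch: Fetcher, skew: float = 0.1,
                 clock: Callable[[], float] = time.monotonic):
        self._fetch = fetch
        self._skew = skew  # refresh once less than this fraction of the TTL remains
        self._clock = clock
        self._token: Optional[str] = None
        self._refresh_at = 0.0

    def get(self) -> str:
        now = self._clock()
        if self._token is None or now >= self._refresh_at:
            token, ttl = self._fetch()
            self._token = token
            self._refresh_at = now + ttl * (1 - self._skew)
        return self._token
```

Injecting the clock keeps the refresh logic testable without sleeping; callers just call `get()` before each use and never hold on to the raw token.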

Immutable Secrets - what's in a name?

There's a broader question, here, about whether the value of secrets should be immutable over the lifespan of a container. Google's Container Best Practices[^2] suggest that "a container won't be modified during its life: no updates, no patches, no configuration changes. [...] If you need to update a configuration, deploy a new container (based on the same image), with the updated configuration." Seems pretty clear cut, right?

Well, not really. What is the configuration value in question, here? Is it the actual token which is used to authenticate, or is it the Secret-store path at which that token can be found?

- If the former, then when the token rotates, the configuration value has been changed, and so a new container should be started.

- If the latter, then a token rotation doesn't invalidate the configuration value (the path). The application on the container can keep running - but will have to carry out some logic to refresh its (in-memory) view of the token.
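The second option can be sketched as an application whose only immutable configuration is a file path, and which re-reads the file whenever it changes. This assumes the token is exposed as a file (for example, one rendered by the Vault Agent Injector); the class name is hypothetical, and change detection via `os.stat` (modification time, size, inode) is one simple choice among several.

```python
import os
from typing import Optional, Tuple


class FileBackedSecret:
    """The immutable config is the *path*; the value is re-read whenever the file changes."""

    def __init__(self, path: str):
        self.path = path
        self._stamp: Optional[Tuple[int, int, int]] = None
        self._value: Optional[str] = None

    def get(self) -> str:
        st = os.stat(self.path)  # follows symlinks, so a swapped link target is also noticed
        stamp = (st.st_mtime_ns, st.st_size, st.st_ino)
        if stamp != self._stamp:
            with open(self.path, encoding="utf-8") as f:
                self._value = f.read().strip()
            self._stamp = stamp
        return self._value
```

The container never restarts when the token rotates; only its in-memory view changes, at the cost of a `stat` call per read.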

When you start to look at it like that, there's plenty of precedent for "higher-level" configuration values, which are interpreted at runtime to derive more-primitive configuration values:

- Is the config value "how long you should wait between retries", or "the rate at which you should backoff retries"?

- Is it "the colour that a button should be", or "the name of the A/B test that provides the treatments for customer-to-colour mappings"?

- Is it "the number of instances that should exist", or "the maximal per-instance memory usage that an auto-scaling group should aim to preserve"?
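To make the first and third examples concrete, here is a minimal sketch of deriving primitive values from higher-level ones at runtime. The function and parameter names are hypothetical. The replica calculation follows the shape of the Kubernetes HorizontalPodAutoscaler formula, `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`, but omits its tolerance and min/max bounds (beyond a floor of one).

```python
import math


def retry_delay(attempt: int, base_delay: float, backoff_factor: float,
                max_delay: float = 60.0) -> float:
    """Primitive: seconds to wait before retry `attempt` (0-based).
    Higher-level config: the base delay and the backoff rate."""
    return min(max_delay, base_delay * backoff_factor ** attempt)


def desired_replicas(current_replicas: int, current_per_instance: float,
                     target_per_instance: float) -> int:
    """Primitive: number of instances.
    Higher-level config: the target per-instance usage (e.g. memory)."""
    ratio = current_per_instance / target_per_instance
    return max(1, math.ceil(current_replicas * ratio))  # never scale to zero here
```

In both cases the value written down in Git stays fixed, while the value actually in effect moves with runtime conditions.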

Configuration systems that allow the behaviour of a system to change at runtime (either automatically in response to detected signals, or as induced by deliberate human operator action) provide greater flexibility and functionality. This functionality - which is often implemented by designing an application to regularly poll an external config (or secret) store for the more-primitive values, rather than to load them once at application startup - comes at the cost of requiring more tooling to preserve some desirable operational properties:

- Testing: If configuration-primitives are directly stored-by-value in Git repos[^3] and a deployment pipeline sequentially deploys them, then automated tests can be executed in earlier stages to provide confidence in correct operation before promotion to later ones. If an environment's configuration can be changed at runtime, there's no guarantee (unless the runtime-configuration system provides it) that that configuration has been tested.

- Reproducibility: If you want to set up a system that almost-perfectly[^4] reproduces an existing one, you need to know the configuration values that were in place at the time. Since time is a factor (you're always trying to reproduce a system that existed at some time in the past, even if that's only a few minutes prior), if runtime-variable and/or pointer-based configurations are in effect, you need to refer to an audit log to know the actual primitives in effect at that time.
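Referring to an audit log in practice means replaying it up to the moment of interest. A minimal sketch, assuming a hypothetical append-only log of `(timestamp, key, value)` entries where a later write to the same key replaces the earlier one:

```python
import bisect
from typing import Dict, List, Tuple

# Hypothetical audit-log entry shape: (timestamp, key, value).
AuditEntry = Tuple[float, str, str]


def config_at(log: List[AuditEntry], t: float) -> Dict[str, str]:
    """Replay the log to recover the primitive config values in effect at time t."""
    entries = sorted(log, key=lambda e: e[0])  # stable sort keeps same-time order
    cutoff = bisect.bisect_right([e[0] for e in entries], t)  # include changes made at t
    state: Dict[str, str] = {}
    for _, key, value in entries[:cutoff]:
        state[key] = value  # later writes to the same key win
    return state


if __name__ == "__main__":
    log = [(10.0, "button_colour", "blue"), (20.0, "max_retries", "5"),
           (30.0, "button_colour", "green")]
    print(config_at(log, 25.0))
```

This only works if every runtime change actually lands in the log, which is exactly the extra tooling requirement described above.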

These are certainly trade-offs! As with any interesting question, the answer is - "it depends". It's certainly the case that directly specifying primitive configuration is simpler - it "just works" with a lot of existing tooling, and generally leads to safer and more deterministic deployments. But it also means that there's a longer reflection time (time between "recording the desire for a change in behaviour in the controlling system" and "the changed behaviour taking effect"), because the change has to proceed through the whole deployment process[^5]. This can be unacceptable for certain use-cases:

- operational controls intended to respond in an emergency to preserve some (possibly-degraded) functionality rather than total failure.

- updates to A/B testing or feature flags.

- (Our original use-case) when an authentication secret expires, it would be unacceptable for a service that depends on that secret to be nonfunctional until configuration is updated with a new secret value[^6]. Much better, in this case, for the application itself to replace its in-memory token with a fresh one. So, in this case, I claim that it's preferable to treat "the path at which an authentication secret can be found" as the immutable configuration value, rather than "the authentication secret" - or, equivalently, to invert responsibility from "the application is told what secret to use" to "the application is responsible for fetching (and refreshing) secrets from a(n immutable-over-the-course-of-a-container's-lifecycle) location that it is told".

To be clear, though, I'm only talking here about authentication secrets that have a specified (and short - less than a day or so) Time-To-Live; those which are intended to be created, used, and abandoned rather than persisted. Longer-lived secrets should of course make use of the simpler and more straightforward direct-injection techniques.

What is a version?

An insightful coworker of mine recently made the point that configuration should be considered an integral part of the deployed version of an application. That is - it's not sufficient to say "Image tag v1.3.5 is running on Prod", as a full specification should also include an identification of the config values in play. When investigating or reasoning about software systems, we care about the overall behaviour, which arises from the intersection of code and configuration[^7], not from code alone. The solution we've decided on is to represent an "application-snapshot" as a string of the form `<tag>:<hash>`, where `<tag>` is the Docker image tag and `<hash>` is a hash of the configuration variables that configure the application's behaviour[^8].
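A `<tag>:<hash>` snapshot string can be computed along these lines. The hash function (SHA-256), the canonical encoding (sorted-key JSON), and the 12-character truncation are all my assumptions for the sketch, not necessarily what the scheme described above uses; the important property is that the same configuration always yields the same hash, regardless of key order.

```python
import hashlib
import json
from typing import Mapping


def app_snapshot(image_tag: str, config: Mapping[str, object], length: int = 12) -> str:
    """Return '<tag>:<hash>', hashing a canonical JSON encoding of the config."""
    canonical = json.dumps(dict(config), sort_keys=True, separators=(",", ":"))
    digest = hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:length]
    return f"{image_tag}:{digest}"


if __name__ == "__main__":
    print(app_snapshot("v1.3.5", {"REGION": "us-west", "FEATURE_X": True}))
```

Canonicalizing before hashing matters: without `sort_keys=True`, two identical configurations loaded in a different order would report different versions.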

Note that this approach is not incompatible with the ability to update configuration values at runtime! We merely need to take an outcome-oriented view - thinking about what we want to achieve or make possible. In this case, we want an operator investigating an issue to be prompted to consider proximate configuration changes if they are a likely cause of the issue.

- Is the configuration primitive one which naturally varies (usually within a small number/range of values) during the normal course of operation? Is it a "tuning variable" rather than one which switches between meaningfully-different behaviours? Then, do not include it as a member of the hash. It is just noise which will distract rather than being likely to point to a cause - a dashboard which records multiple version updates every minute is barely more useful than one which does not report any.

  - Though, by all means log the change to your observability platform! Just don't pollute the valuable low-cardinality "application version" concept with it.

- Is the configuration primitive one which changes rarely, and/or which switches between different behaviours? Then, when it is changed (either automatically as a response to signals or system state; or by direct human intervention), recalculate the `<hash>` value and update it while the container continues running[^9].
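The two rules above can be combined into a small tracker: tuning variables are excluded from the hash, and the reported version is recomputed whenever a behaviour-switching value changes at runtime. The class name, the `tuning_keys` set, and the example keys are hypothetical; the hashing choices (SHA-256 over sorted-key JSON, truncated to 12 characters) are assumptions carried over for illustration.

```python
import hashlib
import json
from typing import Dict, FrozenSet


class VersionTracker:
    """Keep '<tag>:<hash>' current while the container runs, ignoring tuning variables."""

    def __init__(self, image_tag: str, config: Dict[str, object], tuning_keys: FrozenSet[str]):
        self.image_tag = image_tag
        self.tuning_keys = tuning_keys
        self.config = dict(config)
        self.version = self._compute()

    def _compute(self) -> str:
        # Only behaviour-switching values contribute to the version.
        behavioural = {k: v for k, v in self.config.items() if k not in self.tuning_keys}
        canonical = json.dumps(behavioural, sort_keys=True, separators=(",", ":"))
        return f"{self.image_tag}:{hashlib.sha256(canonical.encode()).hexdigest()[:12]}"

    def update(self, key: str, value: object) -> bool:
        """Apply a runtime change; return True if the reported version changed."""
        self.config[key] = value
        new_version = self._compute()
        changed = new_version != self.version
        self.version = new_version
        return changed
```

A `False` return is the cue to log the tuning change without bumping the version; a `True` return is the cue to report the new version to the observability platform.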

[^1]: Arguably this isn't "a way of providing secrets to containers" but is rather "a way to make it easier for containers to fetch secrets" - a distinction which actually becomes relevant later in this post...

[^2]: And by describing why that's valuable - "Immutability makes deployments safer and more repeatable. If you need to roll back, you simply redeploy the old image." - they avoid the cardinal sin of simply asserting a Best Practice without justification, which prevents listeners from either learning how to reason for themselves, or from judging whether those justifications apply in a novel and unforeseen situation.

[^3]: which is only practical for non-secret values anyway - so we must always use some "pointer" system to inject secrets into applications.

[^4]: You almost-never want to perfectly reproduce another environment of a system when testing or debugging, because the I/O of the environment is part of its configuration. That is - if you perfectly reproduced the Prod Environment, your reproduction would be taking Production traffic, and would write to the Production database! This point isn't just pedantry - it's helpful to explicitly list (and minimize) the meaningful ways in which you want your near-reproduction to differ (e.g. you probably want the ability to attach a debugger and turn on debug logging, which should be disabled in Prod!), so that you can check that list for possible explanations if your env cannot reproduce behaviour observed in the original. Anyone who's worked on HTTP/S bugs will know what I mean...

[^5]: where the term "deployment process" could mean anything from "starting up a new container with the new primitive values" (the so-called "hotfix in Prod"), to "promoting a configuration change through the deployment pipeline", to "building a new image with different configuration 'baked-in' and then promoting etc....", depending on the config injection location and the degree of deployment safety enforcement. In any case - certainly seconds, probably minutes, potentially double-digit minutes.

[^6]: An alternative, if the infrastructure allowed it, would be an "overlapping rotation" solution, where the following sequence of events occurs: (1) A second version of the secret is created; both `secret-version-1` and `secret-version-2` are valid. (2) All consumers of the secret are updated to `secret-version-2`. This update is reported back to the secret management system, which waits for confirmation (or times out) before proceeding to... (3) `secret-version-1` is invalidated, and only `secret-version-2` is valid. Under such a system, we could have our cake and eat it, too - secrets could be immutable over the lifetime of a container, and there would be no downtime for users of the secret. I'm not aware of any built-in way of implementing this kind of overlapping rotation with Vault/k8s - and, indeed, at first glance the "callbacks" imply a higher degree of coupling than is usual in k8s designs, where resources generally don't "know about" their consumers.

[^7]: Every so often I get stuck in the definitional and philosophical rabbit-hole of wondering whether this is entirely true, or if there's a missing third aspect - "behaviour/data of dependencies". If Service A depends on Service B (or an external database), then as Service B's behaviour changes (or the data in the database changes), a given request to Service A may receive a different response. Is "the behaviour" of a system defined purely in terms of "for a given request, the response should be (exactly and explicitly) as follows...", or should the behaviour be a function of both request and dependency-responses? The answer - again, as always - is "it depends": each perspective will be useful at different times and for different purposes. Now that you're aware of them both, though, be wary of misunderstandings when two people are making different assumptions!

[^8]: which requires an enumeration of said variables to exist in order to iterate over them. Which is a good thing to exist anyway, so that a developer or operator knows all the levers they have available to them, and (hopefully!) has some documentation of their intended and expected effects.

[^9]: I should acknowledge that I haven't yet confirmed that work's observability platform actually supports this. It would be a shame if it didn't - a small-minded insistence that "configuration values should remain constant over the lifetime of a container" would neglect to acknowledge the practicality of real-world use-cases.
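The "overlapping rotation" sequence described in the footnote about expiring authentication secrets (create a second version, wait for every consumer to confirm, then invalidate the first) can be modelled as a small in-memory state machine. This is a sketch of the protocol only, under the assumption that consumers can report back which version they use; every name in it is hypothetical, and it is not a feature of Vault or Kubernetes.

```python
from typing import Dict, Iterable, Set


class OverlappingRotator:
    """In-memory model of the overlapping-rotation protocol; all names are hypothetical."""

    def __init__(self, consumers: Iterable[str]):
        self.current = 1
        self.valid: Set[int] = {1}
        # Which secret version each consumer has confirmed it is using.
        self.in_use: Dict[str, int] = {c: 1 for c in consumers}

    def begin_rotation(self) -> int:
        """Step 1: create a new version; old and new versions are both valid."""
        self.current += 1
        self.valid.add(self.current)
        return self.current

    def acknowledge(self, consumer: str, version: int) -> None:
        """Step 2: a consumer reports that it has switched to `version`."""
        if version not in self.valid:
            raise ValueError(f"version {version} is not valid")
        self.in_use[consumer] = version

    def try_finish(self, timed_out: bool = False) -> bool:
        """Step 3: invalidate older versions once all consumers confirm (or on timeout)."""
        if timed_out or all(v == self.current for v in self.in_use.values()):
            self.valid = {self.current}
            return True
        return False
```

The `in_use` map is exactly the coupling noted in that footnote: the secret's owner has to know about, and hear back from, every one of its consumers.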