What is this?

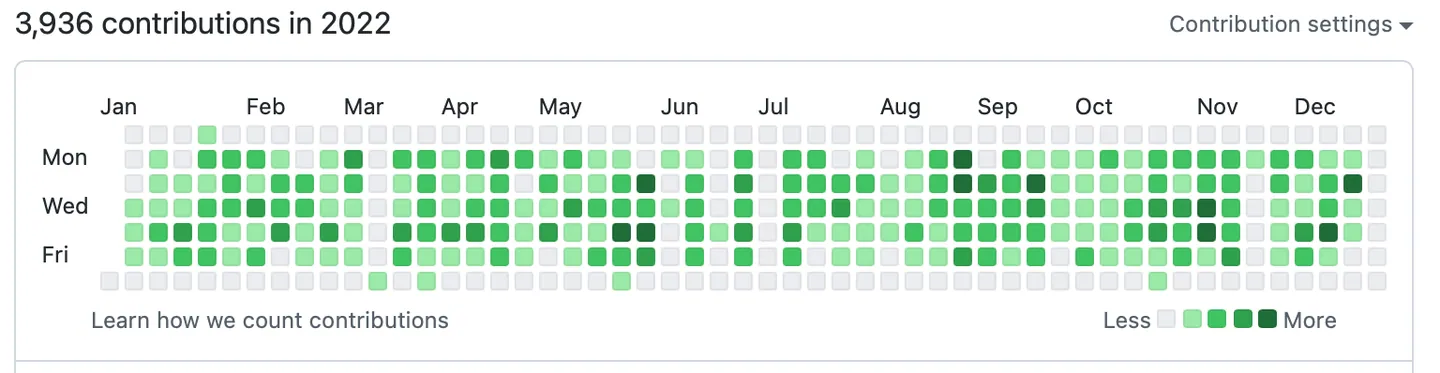

This is the code for a Gitea Action that can be used to generate GitHub Contribution History based on the commits you have made to the source repo.

Onboarding

Prerequisites

- Repos must use `main` as the default branch. Come on, it's been like 5 years.
  - ...ok fine I will consider adding customization for this. But not a priority to begin with.

- No two source repos can have commits that happened at the exact same time. It would be technically possible to support this[^1], but it would be an arse. Under this constraint, finding "the first commit in RepoB earlier than or equal to commit-X in RepoA" is a query whose result immediately answers "does this match the resultant description required in RepoB?": if not, we write the commit in; if so, we assume that this source commit has already been represented, and skip it. Whereas if two commits can have identical timestamps, we might find a target commit ("latest before the given source commit") that doesn't match the source commit, even though a matching target commit could exist (at the same time, but earlier by `git log` sub-ordering), resulting in a loop where two repos would keep inserting themselves after each other's equivalent-time representation.
  - ...I think a diagram would help here if I had to explain it to someone.
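To make the timestamp constraint concrete, here is a minimal sketch of the lookup described above. It is not this Action's actual code: the `Commit` type, the `description` field, and both function names are hypothetical, and the target history is modelled as a plain list rather than a real `git` repo.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Commit:
    timestamp: int    # commit time in seconds since the epoch
    description: str  # hypothetical: text identifying the source commit it represents


def latest_at_or_before(target: List[Commit], when: int) -> Optional[Commit]:
    """Return one target commit with the greatest timestamp <= when, if any."""
    candidates = [c for c in target if c.timestamp <= when]
    # On a timestamp tie, max() returns the first candidate it saw,
    # so only one of the tied commits is ever visible to the caller.
    return max(candidates, key=lambda c: c.timestamp, default=None)


def needs_write(source_time: int, expected_description: str,
                target: List[Commit]) -> bool:
    """True if the source commit does not appear to be represented yet."""
    found = latest_at_or_before(target, source_time)
    return found is None or found.description != expected_description


# With unique timestamps, a single lookup answers the question.
unique = [Commit(90, "RepoA: first"), Commit(100, "RepoB: second")]
print(needs_write(100, "RepoB: second", unique))  # False

# With a collision, RepoB's commit is present but hidden behind RepoA's,
# so it looks missing and would be written again on every run.
collided = [Commit(100, "RepoA: x"), Commit(100, "RepoB: y")]
print(needs_write(100, "RepoB: y", collided))     # True
```

The second case is the loop described in the bullet: each repo finds the other's same-time commit, decides its own is missing, and inserts it again.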

Explicitly ok - you can run this on a target repo containing no commits; it's not necessary to create an awkward README.md-only commit to "prime" the repo[^2].

Not a prerequisite, but a practicality note - I think this has a runtime[^3] which is quasi-quadratic - i.e. O(<number_of_commits_requested_to_sync_from_source_repo> * <number_of_commits_in_target_repo_after_that>). Ideally, you should sync only a limited number of commits - if you trigger it on every push of the source repo, it'll be kept up-to-date from there on. That does mean that you won't get any retroactive history, though - so, by all means try a one-off `limit: 0` to sync "everything from all time in the source repo" (TODO - haven't actually implemented that behaviour yet!); but if that times out, maybe use binary search to figure out how many commits would complete within whatever your runtime limitation is, then accept that as your available history? Or temporarily acquire an executor with a longer runtime limit (that's fancy-speak for - instead of running it on your CI/CD platform, run it on a developer's workstation and leave it on overnight :P)
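The binary-search idea can be sketched roughly as follows. Everything here is an assumption for illustration: `completes_in_time` stands in for "run a sync of this many commits and see whether it finishes", and the search assumes that if N commits finish in time then any smaller number does too. Note that `n` here is a plain commit count, so 0 means "sync nothing", unlike `limit: 0` above.

```python
from typing import Callable


def largest_feasible_count(completes_in_time: Callable[[int], bool],
                           upper: int) -> int:
    """Largest n in [0, upper] for which completes_in_time(n) is True.

    Assumes feasibility is monotonic: if n commits finish in time,
    so does any smaller number. Syncing 0 commits is treated as
    trivially feasible.
    """
    lo, hi = 0, upper
    while lo < hi:
        mid = (lo + hi + 1) // 2  # round up so the loop always makes progress
        if completes_in_time(mid):
            lo = mid              # mid works; the answer is mid or larger
        else:
            hi = mid - 1          # mid fails; the answer is smaller
    return lo


# Toy stand-in for a real run: pretend the cost grows quadratically
# and the runner allows 50 "units" of work.
print(largest_feasible_count(lambda n: n * n <= 50, upper=100))  # 7
```

Each probe is a real sync attempt in practice, so this costs roughly log2(upper) runs rather than one per candidate count.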

If a target-repo gets so unreasonably large that even the runtime of syncing it from a single commit is too high, then I guess you could trigger writing to different target repos from each source repo in some hash-identified way - but, as I've said many times already in this project, YAGNI :P

Usage

TODO - provide example .gitea/workflows/ file here showing usage (you can look at the self-triggering example in this repo's own directory, but we could do better by using the context variables and an external secrets store)

FAQs

Why not just use Gitea's existing mirroring functionality?

(As described here)

Four reasons (I do not claim that they are equally convincing):

- Repository-syncing is, AFAIK, set from a UI interaction - there is no listed API for it (so it can't be activated programmatically), nor is it possible to activate it by putting a templated metadata/configuration file in the repo, as with Dependabot, etc. (so it can't be part of a repository template). By contrast, if this functionality is activated via a `workflows/*.yaml` file, it can be created in a freshly-initialized repository from a (cookiecutter, or similar) template.

- Many (although not necessarily all) self-hosters see virtue in reducing the value of GitHub. The more code is hosted somewhere other than GitHub - even if GH has an "index" of that code - the more normalized decentralized and federated systems will become. Those who self-host Gitea, therefore, are likely to enjoy the opportunity to benefit from GitHub's status as a well-understood and discoverable platform by integrating with its recognition product, while denying it much of the value of the code itself.

- Writing code for yourself helps you understand the systems and tools better.

- This is a project that feels easily within my capabilities, but still sizable enough to have several moving parts. I'm in an early test period of figuring out whether Cursor (and AI Dev Tools in general) are actually worth it, so using them on a project that doesn't stretch me in other ways is sensible - only change one thing at a time to see whether it makes a difference! If I'd taken on a hard project and tried to use Cursor for it, I would have been simultaneously spending brain power on "solving the problem" as well as "learning the tool" - reducing my ability to focus on the latter. I also might have been tempted to use the tool to answer in areas where I would have had zero capability to check it for correctness, thus reducing my ability to accurately evaluate the tool's utility.

Doesn't this already exist?

Not that I know of. In particular - this other repo merely generates "noise" that is not based on real commits. It is clearly intended to falsify a GitHub contribution history, whereas this Action is intended to make sure the history accurately reflects commits, even those recorded on a different forge.

Incidentally, this is also kinda my answer to a different FAQ: Isn't this forbidden by ToS?? I mean...I don't think so, because if that other repo is still up and unbanned, you gotta assume GitHub is fine with this. I don't see any moral reason why it should be forbidden, either:

- It doesn't consume an unreasonable amount of system resources (less, in fact, than Gitea mirroring would, because less file data is being transferred)

- No lie is being told about productivity - the commits being represented are real commits, no-one is lying about how much work they've done (unlike with the other repo).

The UI shows all the commits as just having been created! This thing is broken!

...Form of a question?

But, nah, it's not - well, I haven't tested on GitHub yet, but at least on Gitea the "commit history" page shows the push time of commits, not their actual commit time. You can confirm their commit time by clicking into the actual commit's detail page, then hovering over the date there - which is the commit time (also visible in git log if you check it out).
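If you'd rather check from a local checkout than hover in the UI, a small sketch like the one below lists the timestamps `git` has recorded. The function names are made up for illustration; the format placeholders `%H`, `%at`, `%ct` and `%x09` are standard `git log` ones (hash, author time, committer time, tab). Which of the two timestamps a given forge displays is not something this sketch assumes.

```python
import subprocess
from datetime import datetime, timezone
from typing import Dict, Tuple

# hash <TAB> author time <TAB> committer time, both as Unix timestamps
LOG_FORMAT = "%H%x09%at%x09%ct"


def parse_commit_times(log_output: str) -> Dict[str, Tuple[datetime, datetime]]:
    """Map each commit hash to its (author time, committer time) in UTC."""
    times = {}
    for line in log_output.splitlines():
        if not line.strip():
            continue
        sha, author_ts, committer_ts = line.split("\t")
        times[sha] = (
            datetime.fromtimestamp(int(author_ts), tz=timezone.utc),
            datetime.fromtimestamp(int(committer_ts), tz=timezone.utc),
        )
    return times


def read_commit_times(repo_path: str) -> Dict[str, Tuple[datetime, datetime]]:
    """Run `git log` in repo_path and parse the recorded timestamps."""
    result = subprocess.run(
        ["git", "-C", repo_path, "log", f"--format={LOG_FORMAT}"],
        capture_output=True, text=True, check=True,
    )
    return parse_commit_times(result.stdout)
```

Calling `read_commit_times(".")` inside a checkout of the target repo should show the original times even when the history page shows everything as freshly pushed.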

I gotta assume that that's enough for GitHub history rewrite to be legal, if that other repo works. If not...I will consider myself the most-trolled I've ever been...

[^1]: Actually I don't know if it would be possible to implement this - I don't know if `git` would even allow two commits at the same time in a target repo. Gotta assume it does given how many folks love to trumpet the fact that they have monorepos - at the scale of possible-committers they're at (especially considering automated tools making updates) there's gotta be potential for a time-collision - just "not earlier than parent" seems like a reasonable constraint. Might be fun to write a test for "child-older-than-parent", but I think any such repo is f$^*-ed up enough to justify not supporting it. But, ok then, due to that potential, I guess technically another prerequisite is actually "No source repo may have a parent that happened later than a child". If you had to read this far into the small print to figure this out when you encountered an error, then, frankly, you brought this on yourself and I hope you are feeling suitably embarrassed 😜

[^2]: I guess another feature request could be - if the target repo doesn't exist, create it! I'm going to rephrase my common refrain here to IAGNI - I ain't gonna need it. But if you want it for some reason (idk why you would be programmatically creating target repos unless some serious scale is going on...and I kinda wanna calculate that now...Hmm, TODO), ask for it and it shouldn't be hard for me to add it.

[^3]: Note that no network resources or API calls are used in this quadratic period, so you don't run a risk of overloading anything (including your wallet). The potentially-costly operations scale linearly with repo size - two pulls, one push - though note of course that pulling a larger repo takes more literal throughput. But I'm not aware of any provider that has significant limitations on that? Because, y'know, monorepos exist at large companies and are (one assumes?) regularly pulled (at least once every time a developer onboards), and you are not gonna get near their sizes unless you're a company (and if you are, pay me to implement that feature :P )